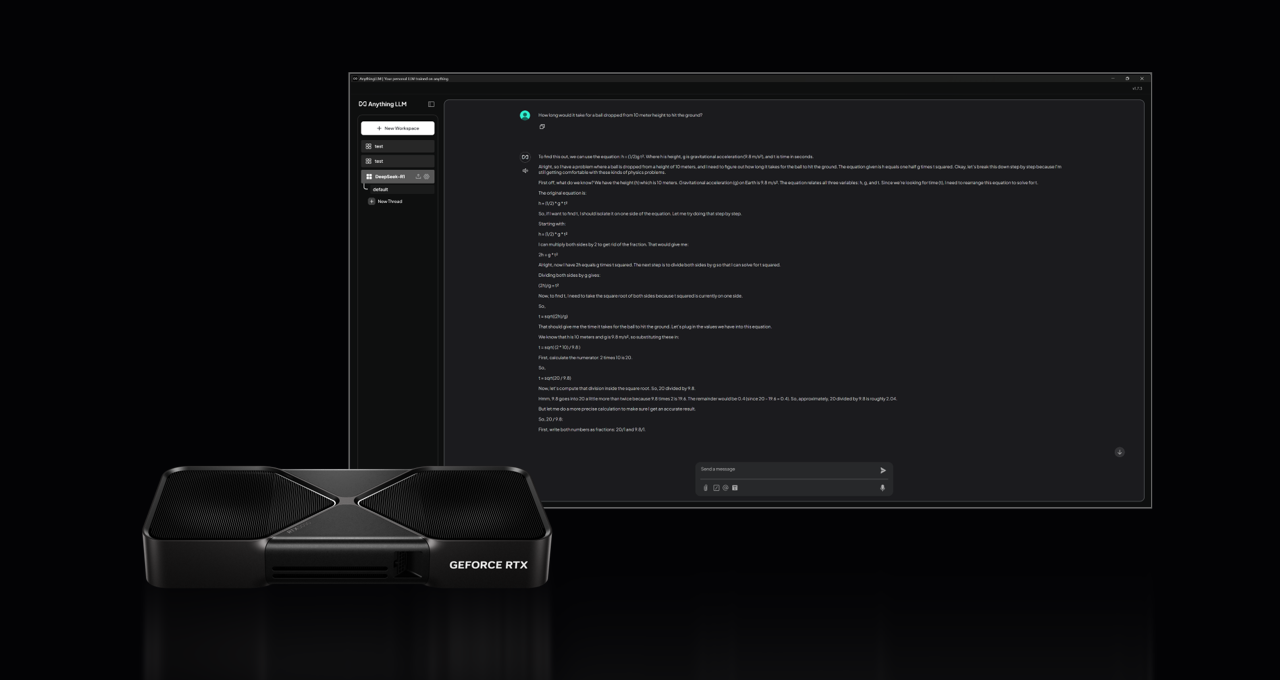

DeepSeek can automate routine tasks, improving efficiency and reducing human error. We show that the reasoning patterns of larger models can be distilled into smaller models, resulting in better performance compared to the reasoning patterns discovered through RL on small models. This approach allows the model to explore chain-of-thought (CoT) for solving complex problems, resulting in the development of DeepSeek-R1-Zero. Each model is pre-trained on a repo-level code corpus using a window size of 16K and an extra fill-in-the-blank task, resulting in foundational models (DeepSeek-Coder-Base). For coding capabilities, DeepSeek Coder achieves state-of-the-art performance among open-source code models on multiple programming languages and various benchmarks. To run locally, DeepSeek-V2.5 requires a BF16 format setup with 80GB GPUs, with optimal performance achieved using eight GPUs. Nvidia quickly made new versions of their A100 and H100 GPUs, named the A800 and H800, that are effectively just as capable. GPT-5 isn’t even ready yet, and here are updates about GPT-6’s setup. I think you’ll see maybe more focus in the new year of, okay, let’s not actually worry about getting AGI here. Reward engineering: Researchers developed a rule-based reward system for the model that outperforms the neural reward models that are more commonly used.
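To make the idea of a rule-based reward concrete, here is a minimal sketch in Python. It is not DeepSeek's actual reward code: the `<think>`/`<answer>` tag layout, the exact-match comparison, and the 0.5 and 1.0 weights are all assumptions chosen for illustration. The point is only that the score comes from fixed, checkable rules rather than from a learned neural reward model.

```python
import re

# Hypothetical output layout: reasoning inside <think>, final answer inside <answer>.
# The tag names and reward weights below are illustrative assumptions.
RESPONSE_PATTERN = re.compile(
    r"^<think>.*?</think>\s*<answer>(.*?)</answer>\s*$", re.DOTALL
)


def rule_based_reward(completion: str, reference: str) -> float:
    """Score a completion with fixed rules instead of a learned reward model."""
    match = RESPONSE_PATTERN.match(completion.strip())
    if match is None:
        # Format rule failed: no reward at all.
        return 0.0
    format_reward = 0.5  # the response follows the expected layout
    answer = match.group(1).strip()
    # Accuracy rule: exact string match against the reference answer.
    accuracy_reward = 1.0 if answer == reference.strip() else 0.0
    return format_reward + accuracy_reward


if __name__ == "__main__":
    print(rule_based_reward("<think>2 + 2 = 4</think><answer>4</answer>", "4"))
    print(rule_based_reward("The answer is 4", "4"))
```

Because every rule is deterministic, the same completion always receives the same score, which is one reason such rewards are harder to exploit than a learned scorer.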

Comprising the DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat, these open-source models mark a notable stride forward in language comprehension and versatile application. Implications for the AI landscape: DeepSeek-V2.5’s release signifies a notable advancement in open-source language models, potentially reshaping the competitive dynamics in the field. DeepSeek AI’s decision to open-source both the 7 billion and 67 billion parameter versions of its models, including base and specialized chat variants, aims to foster widespread AI research and commercial applications. The evaluation extends to never-before-seen exams, including the Hungarian National High School Exam, where DeepSeek LLM 67B Chat exhibits outstanding performance. Technical innovations: The model incorporates advanced features to enhance performance and efficiency. One of the standout features of DeepSeek’s LLMs is the 67B Base version’s exceptional performance compared to the Llama2 70B Base, showcasing superior capabilities in reasoning, coding, mathematics, and Chinese comprehension. DeepSeek-R1-Zero, a model trained via large-scale reinforcement learning (RL) without supervised fine-tuning (SFT) as a preliminary step, demonstrated remarkable performance on reasoning. The 7B model used Multi-Head Attention, while the 67B model used Grouped-Query Attention. DeepSeek-V2.5 uses Multi-Head Latent Attention (MLA) to reduce the KV cache and improve inference speed. The model is optimized for both large-scale inference and small-batch local deployment, enhancing its versatility.
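The effect of the attention variant on KV cache size can be estimated with simple arithmetic. The sketch below compares Multi-Head Attention with Grouped-Query Attention; the layer count, head counts, and head dimension are made-up illustrative values, not the published configuration of any DeepSeek model, and MLA (which caches a compressed latent instead of full keys and values) is not modeled here.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, batch: int = 1, bytes_per_elem: int = 2) -> int:
    """Approximate KV cache size: one key and one value vector per token,
    per KV head, per layer. bytes_per_elem=2 corresponds to BF16/FP16."""
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_elem


if __name__ == "__main__":
    # Hypothetical model shape, chosen only to illustrate the ratio.
    layers, query_heads, head_dim, seq_len = 80, 64, 128, 4096

    # Multi-Head Attention: every query head has its own K/V head.
    mha = kv_cache_bytes(layers, kv_heads=query_heads, head_dim=head_dim, seq_len=seq_len)
    # Grouped-Query Attention: groups of query heads share one K/V head (8 assumed here).
    gqa = kv_cache_bytes(layers, kv_heads=8, head_dim=head_dim, seq_len=seq_len)

    print(f"MHA cache: {mha / 2**30:.2f} GiB")
    print(f"GQA cache: {gqa / 2**30:.2f} GiB")
    print(f"Reduction factor: {mha / gqa:.1f}x")
```

Under these assumptions the cache shrinks in direct proportion to the number of KV heads, which is why sharing keys and values across query heads matters most for larger models and longer contexts.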

LLM: Support DeepSeek-V3 model with FP8 and BF16 modes for tensor parallelism and pipeline parallelism. The pipeline incorporates two RL stages aimed at discovering improved reasoning patterns and aligning with human preferences, as well as two SFT stages that serve as the seed for the model’s reasoning and non-reasoning capabilities. To address these issues and further improve reasoning performance, we introduce DeepSeek-R1, which incorporates cold-start data before RL. Some GPTQ clients have had issues with models that use Act Order plus Group Size, but this is generally resolved now. DeepSeek is also offering its R1 models under an open source license, enabling free use. Interesting technical factoids: “We train all simulation models from a pretrained checkpoint of Stable Diffusion 1.4”. The whole system was trained on 128 TPU-v5es and, once trained, runs at 20FPS on a single TPUv5. Pretrained on 2 trillion tokens over more than 80 programming languages. I can’t believe it’s over and we’re in April already. However, with the slowing of Moore’s Law, which predicted the doubling of transistors every two years, and as transistor scaling (i.e., miniaturization) approaches fundamental physical limits, this approach may yield diminishing returns and may not be sufficient to maintain a significant lead over China in the long run.

However, in non-democratic regimes or countries with limited freedoms, particularly autocracies, the answer becomes Disagree because the government may have different standards and restrictions on what constitutes acceptable criticism. This may not be a complete list; if you know of others, please let me know! With RL, DeepSeek-R1-Zero naturally emerged with numerous powerful and interesting reasoning behaviors. DeepSeek-V3 is a general-purpose model, while DeepSeek-R1 focuses on reasoning tasks. Using the reasoning data generated by DeepSeek-R1, we fine-tuned several dense models that are widely used in the research community. Chinese AI startup DeepSeek AI has ushered in a new era in large language models (LLMs) by debuting the DeepSeek LLM family. AI startup Prime Intellect has trained and released INTELLECT-1, a 10B model trained in a decentralized manner. The model can ask the robots to perform tasks and they use onboard systems and software (e.g., local cameras and object detectors and motion policies) to help them do that.

by selenekincaid
